![2.5GB of video memory missing in TensorFlow on both Linux and Windows [RTX 3080] - TensorRT - NVIDIA Developer Forums](https://global.discourse-cdn.com/nvidia/original/3X/f/0/f09a7a602c7a57bad5c5b8bca723ddedcda69bc2.png)

2.5GB of video memory missing in TensorFlow on both Linux and Windows [RTX 3080] - TensorRT - NVIDIA Developer Forums

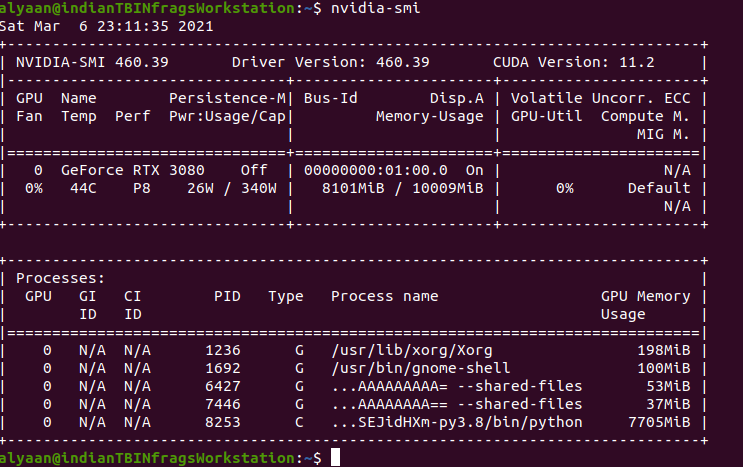

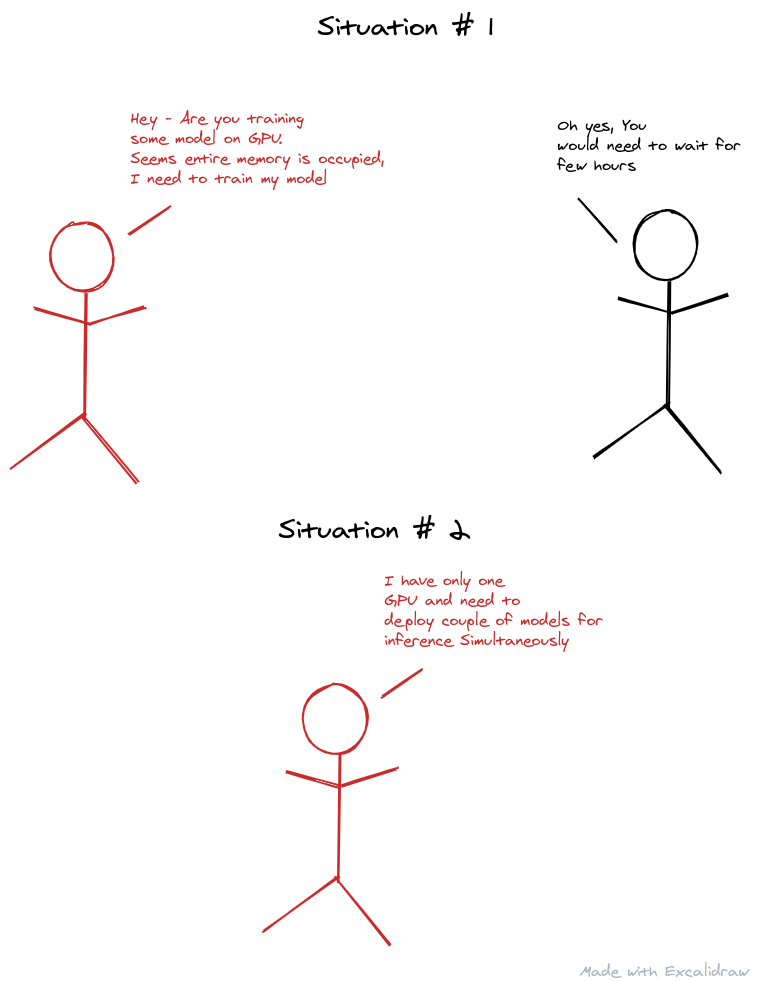

Memory Hygiene With TensorFlow During Model Training and Deployment for Inference | by Tanveer Khan | IBM Data Science in Practice | Medium

Error allocator -gpu when execute vgg16 100 epoche and 16 bache size - General Discussion - TensorFlow Forum

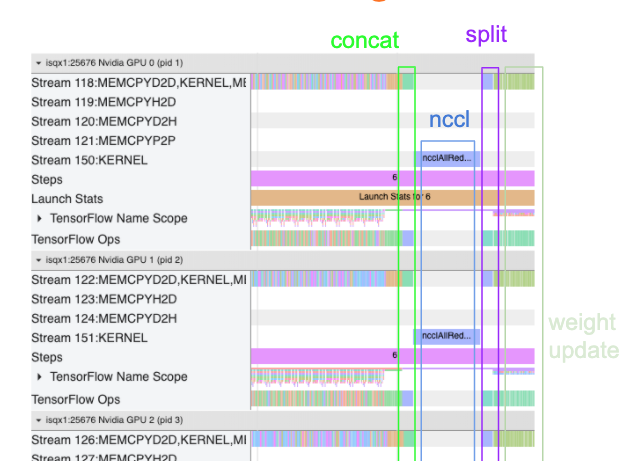

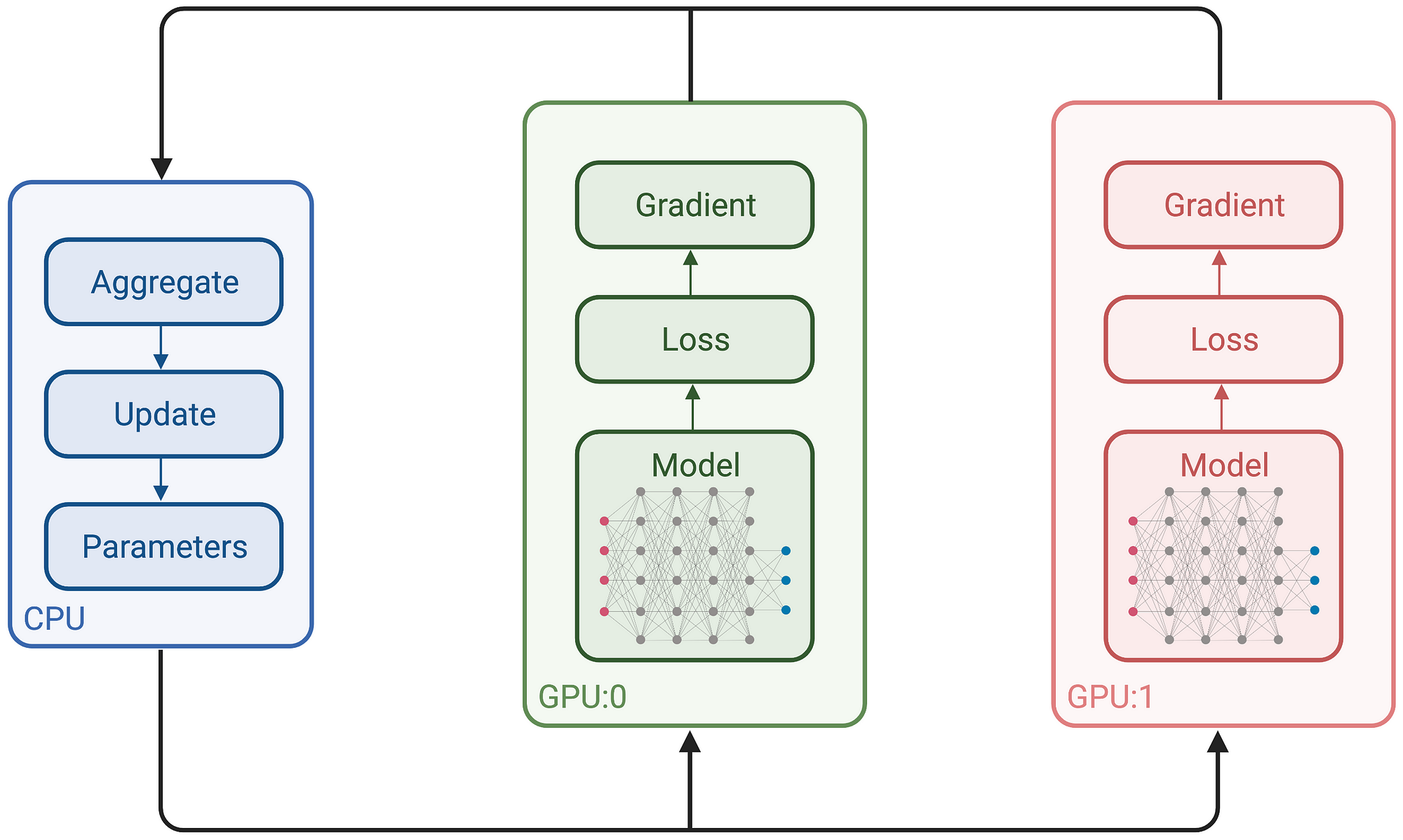

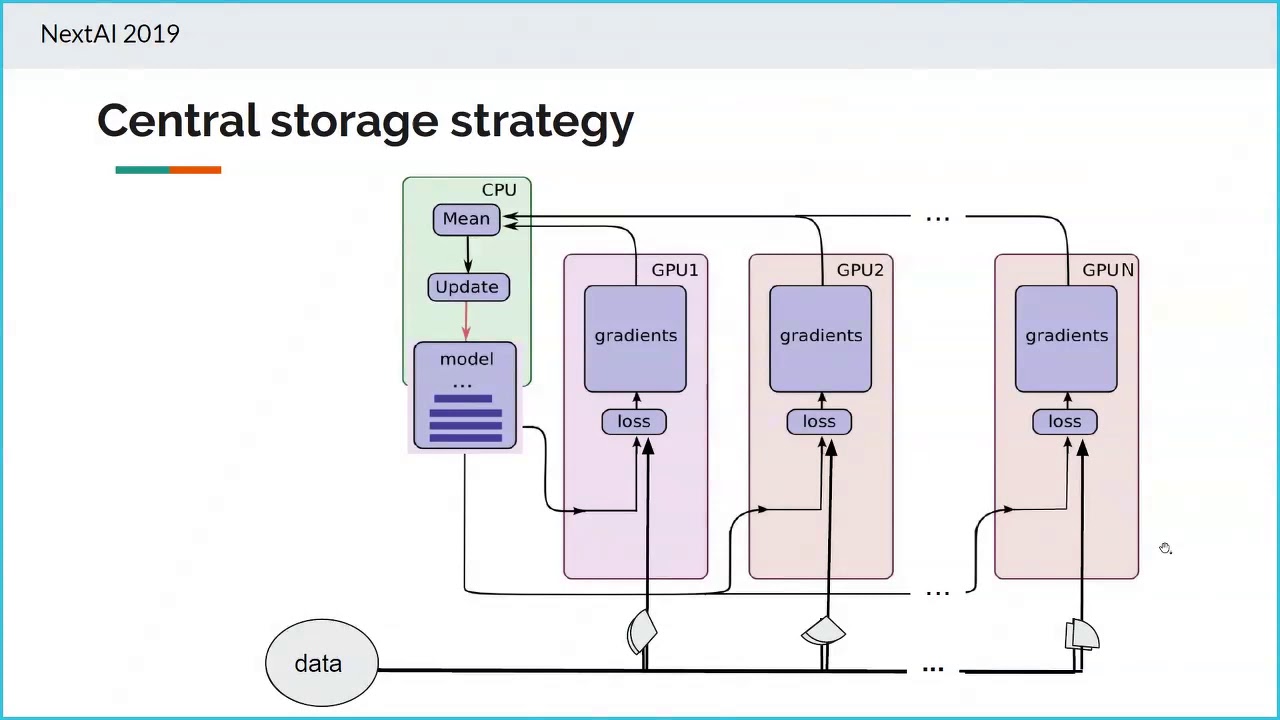

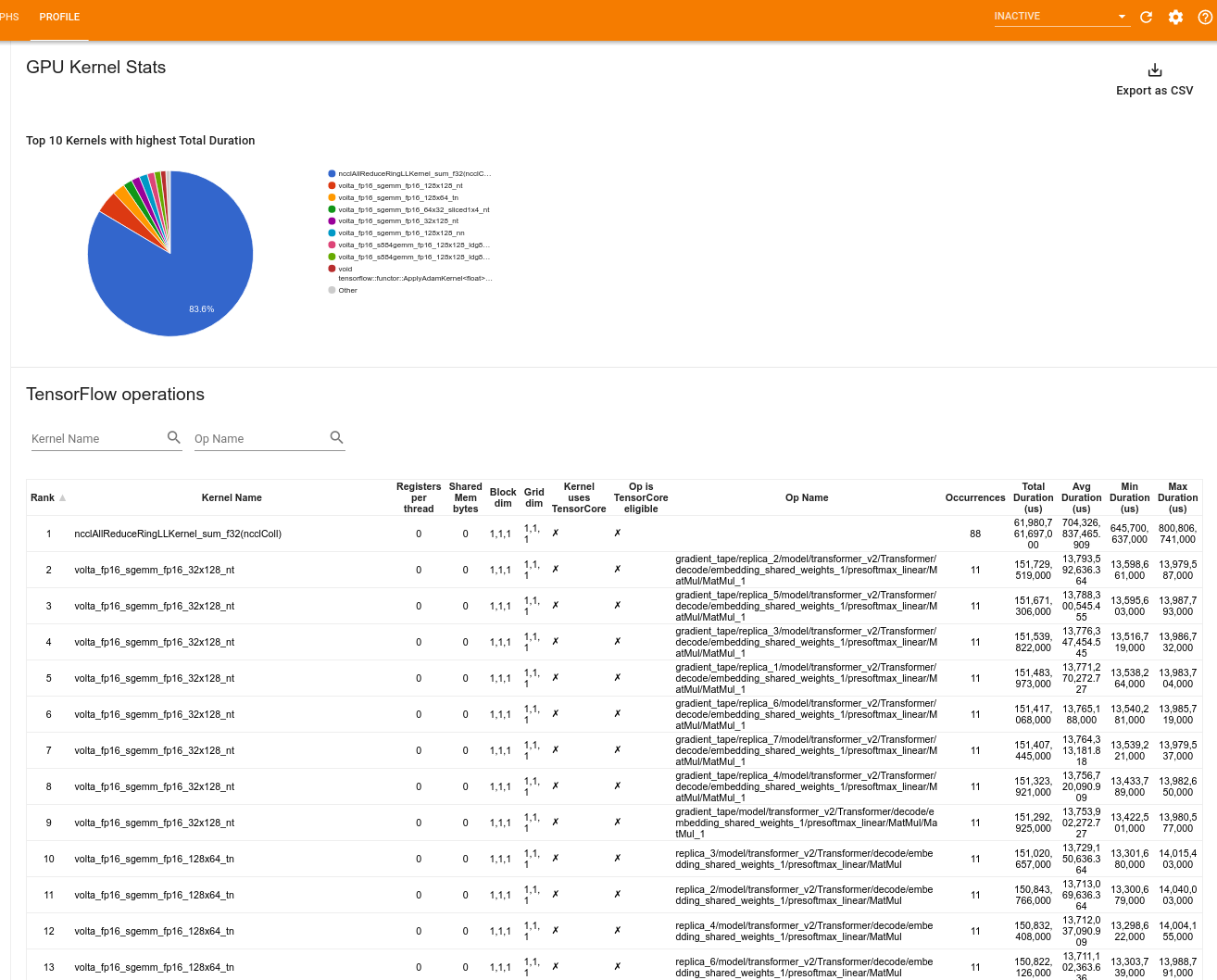

Best practices for TensorFlow 1.x acceleration training on Amazon SageMaker | AWS Machine Learning Blog

TensorFlow Performance with 1-4 GPUs -- RTX Titan, 2080Ti, 2080, 2070, GTX 1660Ti, 1070, 1080Ti, and Titan V | Puget Systems